The goal of the project is to create an algorithm in Python able to autonomously learn how to play Flappy Bird using a simple kind of neuroevolution, similar to the one used for the Obstacle Course project. However, this new program will be a bit different, since it will also exploit Greedy Algorithms.

The idea behind the game is quite simple: the red square on the left (a bird in the original game) must go through the tubes without touching them and survive as long as possible. The square is subject to gravity and, as like the bird in the game, can use its wings and fly higher.

One of the first attempts of the algorithm:

In order to create the user interface, I use the module tkinter, that makes it quite manageable to handle graphical elements.

The tubes are generated on the right side of the window at a random height, and they approach the square/bird at a fixed speed. The square starts its match at mid-height and is always subject to gravity. It dies when it collides with the tubes or when it falls to the ground, but not when it reaches the upper bound of the window, as like in the original game.

The game is completely contained in the class Game, that includes several methods, such as:

move_bird function:

def move_bird(self):

if self.jump_triggered == True and self.bird_speed_y < 0: #inizio nuovo salto

self.time_count=0

self.bird_speed_y = 10 #cambio la velocità verticale con una positiva

self.bird_speed_y0 = self.bird_speed_y

self.bird_y0 = self.bird_y #posizione verticale iniziale

self.jump_triggered = False

else: #è già in un salto

self.jump_triggered = False

self.bird_speed_y -= 0.4 #considero accelerazione verso il basso di 0.8m/s^2

self.new_y = self.bird_y0 - self.bird_speed_y0 * self.time_count + 0.2 * self.time_count * self.time_count

if self.new_y <= 90:

self.new_y = 90

self.movement_y = self.new_y - self.bird_y

self.w.move(self.bird, 0, self.movement_y)

self.bird_y = self.new_y

self.time_count+=1

Once the game is ready, the algorithm has to learn to play with it. It will have access to two numbers: the horizontal distance from the tubes and the vertical distance from the top of the lower one. Using this information, the algorithm will have to decide whether to flap its wings or not. These two inputs will be interpreted by neural networks structured in this way:

The algorithm I've written is a kind of greedy algorithm applied to the weights and biases of the considered neural network. The programm will start from a neural network initialised at random, it will use it to play a fixed number of games and give it as a score the average time it survived in those games.

After having tested the first neural network, the programm will create a slightly mutated copy and test it in the same way as the first. If the score improves, the new network will take the place of the first one and will be used to create new networks, otherwise another mutation of the original network will be tested.

Some of the methods in Game that handle the greedy approach:

def check_score(self):

if self.num_move % 1 == 0:

txt_for_print = "G: " + str(self.num_move) + " BEST: " + str(round((self.best_score), 4)) + " | " + str(round((self.last_score), 4))

print(txt_for_print)

if self.last_score >= self.best_score:

self.best_score = self.last_score

self.accept_move()

self.propose_move()

else:

self.move_attempt += 1

self.propose_move()

def accept_move(self):

self.move_attempt = 0

self.config.set_weights_as(self.config_test)

def propose_move(self):

self.num_move += 1

mutation_rate = 0.02

self.config_test.set_mutation_of(self.config, rate=mutation_rate)

The neural networks are handled by a NeuralNetwork class that not only handles layers, neurons and computations, but also contains some specific methods that are useful both for greedy and genetic algorithms. For example:

set_mutation_of() function:

def set_mutation_of(self, other, rate=0.10):

if not isinstance(other, NeuralNetwork) or not np.all(self.layers_sizes == other.layers_sizes):

raise Exception("This works only with another NN of same size")

self.set_weights_as(other)

for l in range(self.n_layers-1):

random_ws = np.random.rand(self.layers_sizes[l], self.layers_sizes[l+1]) * 2 - 1

mask_w = np.random.rand(self.layers_sizes[l], self.layers_sizes[l+1]) < rate

self.weights[l][mask_w] = random_ws[mask_w]

random_bs = np.random.rand(self.layers_sizes[l+1]) * 2 - 1

mask_b = np.random.rand(self.layers_sizes[l+1]) < rate

self.biases[l][mask_b] = random_bs[mask_b]

Completing this project has taken, in total, almost two years. I have changed the approach several times, I have interrupted it even more times, it took a while to spot some bugs... But I can finally be satisfied. The algorithm is able to drastically and rapidly improve its score and to find ways to survive forever.

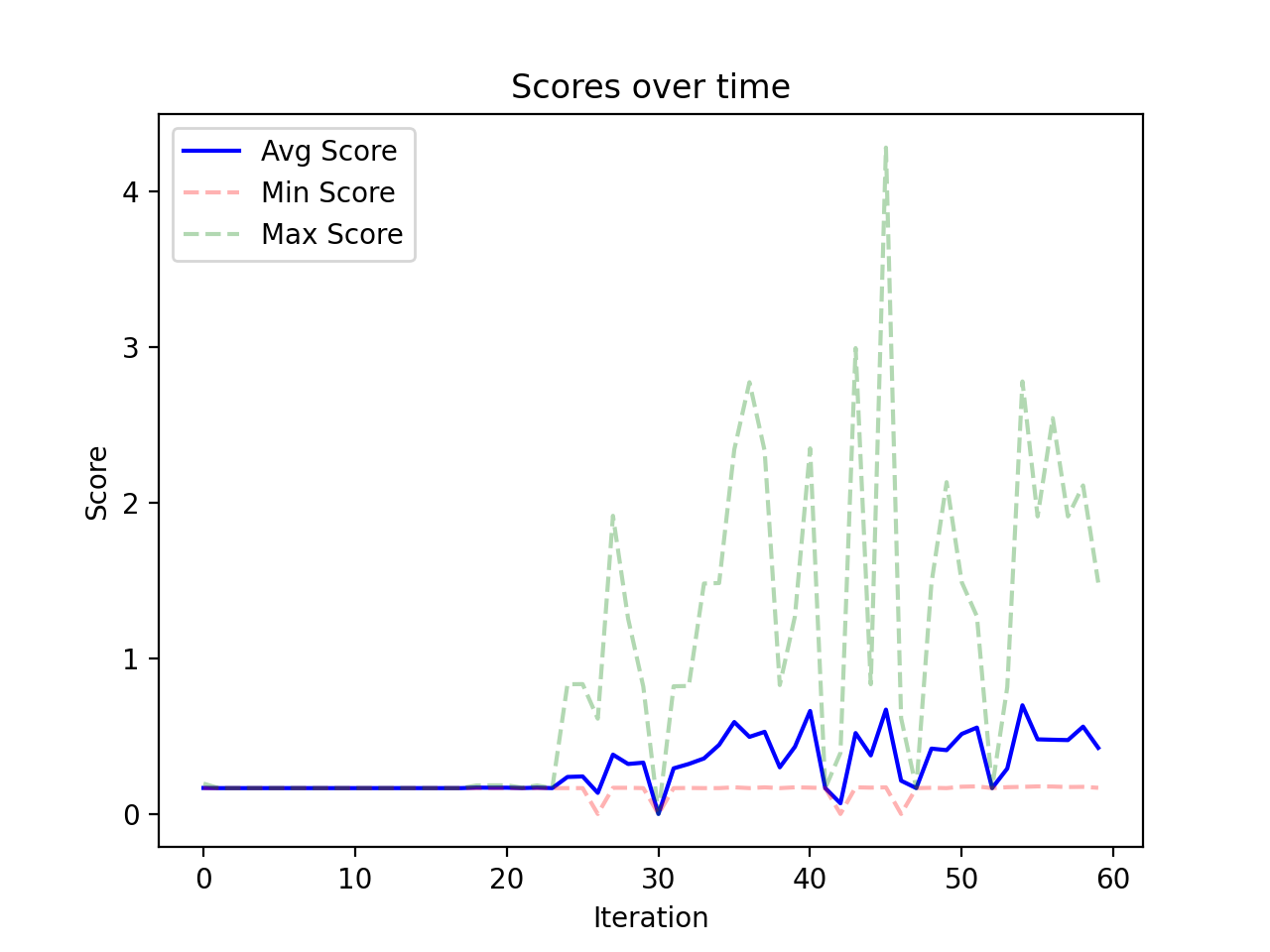

The average, minimum and maximum scores over time in a short training example:

A neural network that managed to reach incredibly high scores (roughly 500 tubes) in just 74 iterations: